#unstructured web data

Explore tagged Tumblr posts

Text

How to reduce product returns with Digital shelf analytics

Discover how Digital shelf analytics can help minimize product returns to transform your retail success. Dive in for actionable strategies. Read more https://xtract.io/blog/how-to-reduce-product-returns-with-digital-shelf-analytics/


Text

I think people need to stop using the ‘AI is bad at [thing]’ as an argument against the lack of regulation. If you actually use a certain type of AI for its intended purpose, you will realise quite quickly that it is usually competent.

AI used to identify objects in photos so that you don’t get bees when you search ‘dog’ is actually quite good at what it does.

AI that corrects your spelling and grammar is actually quite good at what it does when you're not drunk off your tits and sending actual gibberish.

AI that retrieves information on the internet so you can search ‘what do you call the things on a giraffe’s head?’ and find relevant articles explaining giraffe anatomy even if they don’t contain those words is actually quite good at what it does.

AI that mimics human language structure in text form [yes, like ChatGPT] is actually quite good at what it does. It was never meant to give you information, it was meant to mimic human text.

AI that summarises the content of internet search results [yes, like Gemini] is actually quite good at what it does.

Yes, this is about the ‘is it 2025’ post. If I search ‘what do you call the things on a giraffe’s head?’, it is able to tell me not only that they are called ossicones but also that sources commonly call them ‘horns’. It is able to look at all the information in its millions of search results, identify what information is most likely to be accurate, what information is most relevant, what information is conflicting, and provide a summary of that.

Let's pretend we are Gemini. We receive a prompt in the form of a question: is it 2025? We plug that into Google's search engine and get about 25 270 000 000 results (that's about 25 billion, by the way). We have no idea that this is a 'pick the most recent information' situation and in all honesty it isn't one. We are relying on Google's unstructured search algorithm that, let's remember, is trained on your web activity (this is why we all get different answers). We have two things we can do here depending on the resources at our disposal:

1) If we recognise we are on a mobile with location services enabled and we have access to the system data, we can simply spit that out. On my mobile, it told me the full date (1st June 2025), the year (2025), that it retrieved it from the system, and the first half of my postal code. If I disable Chrome's access to my location data, it behaves exactly like the second case.

2) If we do not have access to the system data (e.g. laptop browser in incognito mode with all location services disabled), we use our proprietary algorithm and training to identify two sources among relatively conflicting information that agree and that we deem representative of the best results. One is Wikipedia, which states that 2025 is the current year, and the other is a random and bizarre Instagram post about the Gregorian calendar. According to our algorithm, these are the most representative (likely because the majority of initial results are in fact social media New Year's posts), so we declare the year is 2025.

TL;DR Gemini is not meant to answer this sort of question, Google knows this (that's why Gemini doesn't pop up if you ask for the date), and Google probably doesn't care too much because Gemini is good at its actual job. Calling it stupid and useless is not a functional argument because it's simply untrue and this goes for so many AI models. We need to start actually calling them out for the reasons we think they need regulation.


Text

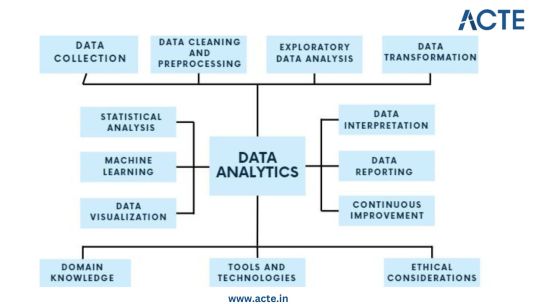

In data analytics, this is the most important concept that everyone needs to understand. The ability to draw insightful conclusions from data is a highly sought-after skill in today's data-driven environment. Data analytics is essential to this process because it gives businesses a competitive edge by enabling them to find hidden patterns, make informed decisions, and gain insight. This guide takes you step by step through the fundamentals of data analytics, whether you're a business professional trying to improve your decision-making or a data enthusiast eager to explore the world of analytics.

Step 1: Data Collection - Building the Foundation

Identify Data Sources: Begin by pinpointing the relevant sources of data, which could include databases, surveys, web scraping, or IoT devices, aligning them with your analysis objectives.

Define Clear Objectives: Clearly articulate the goals and objectives of your analysis to ensure that the collected data serves a specific purpose.

Include Structured and Unstructured Data: Collect both structured data, such as databases and spreadsheets, and unstructured data like text documents or images to gain a comprehensive view.

Establish Data Collection Protocols: Develop protocols and procedures for data collection to maintain consistency and reliability.

Ensure Data Quality and Integrity: Implement measures to ensure the quality and integrity of your data throughout the collection process.

Step 2: Data Cleaning and Preprocessing - Purifying the Raw Material

Handle Missing Values: Address missing data through techniques like imputation to ensure your dataset is complete.

Remove Duplicates: Identify and eliminate duplicate entries to maintain data accuracy.

Address Outliers: Detect and manage outliers using statistical methods to prevent them from skewing your analysis.

Standardize and Normalize Data: Bring data to a common scale, making it easier to compare and analyze.

Ensure Data Integrity: Ensure that data remains accurate and consistent during the cleaning and preprocessing phase.
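The cleaning steps above can be sketched in a few lines of standard-library Python. This is only an illustration under assumptions: the `RAW` records, the `clean` function, and the outlier threshold are all made up for the example, and median imputation and a median-absolute-deviation rule are just one possible choice of techniques.

```python
from statistics import median

# Hypothetical raw records: (customer_id, order_value); None marks a missing value.
RAW = [(1, 20.0), (2, None), (3, 22.0), (3, 22.0), (4, 19.0), (5, 500.0), (6, 21.0)]

def clean(records, mad_threshold=5.0):
    # 1. Remove exact duplicates, keeping the first occurrence in order.
    deduped = list(dict.fromkeys(records))

    # 2. Impute missing values with the median of the observed values.
    observed = [value for _, value in deduped if value is not None]
    fill = median(observed)
    imputed = [(key, fill if value is None else value) for key, value in deduped]

    # 3. Drop outliers: values far from the median, measured in units of the
    #    median absolute deviation (MAD). The threshold is an arbitrary choice.
    center = median(value for _, value in imputed)
    mad = median(abs(value - center) for _, value in imputed)
    kept = [(key, value) for key, value in imputed
            if mad == 0 or abs(value - center) <= mad_threshold * mad]

    # 4. Min-max normalize the remaining values onto the range [0, 1].
    low = min(value for _, value in kept)
    high = max(value for _, value in kept)
    span = (high - low) or 1.0  # avoid dividing by zero when all values match
    return [(key, (value - low) / span) for key, value in kept]
```

Calling `clean(RAW)` drops the duplicate row and the 500.0 order, fills the missing value, and returns the remaining values rescaled between 0 and 1.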

Step 3: Exploratory Data Analysis (EDA) - Understanding the Data

Visualize Data with Histograms, Scatter Plots, etc.: Use visualization tools like histograms, scatter plots, and box plots to gain insights into data distributions and patterns.

Calculate Summary Statistics: Compute summary statistics such as means, medians, and standard deviations to understand central tendencies.

Identify Patterns and Trends: Uncover underlying patterns, trends, or anomalies that can inform subsequent analysis.

Explore Relationships Between Variables: Investigate correlations and dependencies between variables to inform hypothesis testing.

Guide Subsequent Analysis Steps: The insights gained from EDA serve as a foundation for guiding the remainder of your analytical journey.
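As a rough illustration of the summary statistics and correlation checks described above, here is a small standard-library sketch. The `AD_SPEND` and `SALES` figures and both helper functions are invented for the example; the correlation is the ordinary Pearson coefficient.

```python
from math import sqrt
from statistics import mean, median, stdev

# Hypothetical paired observations: weekly ad spend and weekly sales.
AD_SPEND = [10, 20, 30, 40, 50]
SALES = [12, 25, 31, 48, 52]

def summarize(values):
    """Return the basic summary statistics for one numeric column."""
    return {"mean": mean(values), "median": median(values), "stdev": stdev(values)}

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long columns."""
    mx, my = mean(xs), mean(ys)
    covariance = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread = sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return covariance / spread
```

A coefficient close to 1 for `pearson(AD_SPEND, SALES)` would suggest the two columns move together, which is the kind of relationship worth carrying into later hypothesis testing.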

Step 4: Data Transformation - Shaping the Data for Analysis

Aggregate Data (e.g., Averages, Sums): Aggregate data points to create higher-level summaries, such as calculating averages or sums.

Create New Features: Generate new features or variables that provide additional context or insights.

Encode Categorical Variables: Convert categorical variables into numerical representations to make them compatible with analytical techniques.

Maintain Data Relevance: Ensure that data transformations align with your analysis objectives and domain knowledge.
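Two of the transformations above, aggregation and categorical encoding, might look like this in plain Python. The `ORDERS` rows and both function names are hypothetical, and one-hot encoding is only one of several ways to encode categories.

```python
from collections import defaultdict

# Hypothetical order rows with one categorical and one numeric field.
ORDERS = [
    {"region": "north", "amount": 10.0},
    {"region": "south", "amount": 30.0},
    {"region": "north", "amount": 20.0},
]

def average_by(rows, key, value):
    """Aggregate: average of `value` for each distinct `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {group: sum(vals) / len(vals) for group, vals in groups.items()}

def one_hot(rows, key):
    """Encode a categorical field as 0/1 columns, one per sorted category."""
    categories = sorted({row[key] for row in rows})
    return [[1 if row[key] == category else 0 for category in categories]
            for row in rows]
```

Here `average_by(ORDERS, "region", "amount")` gives one average per region, and `one_hot(ORDERS, "region")` turns each row's region into a numeric vector that an analytical technique can consume.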

Step 5: Statistical Analysis - Quantifying Relationships

Hypothesis Testing: Conduct hypothesis tests to determine the significance of relationships or differences within the data.

Correlation Analysis: Measure correlations between variables to identify how they are related.

Regression Analysis: Apply regression techniques to model and predict relationships between variables.

Descriptive Statistics: Employ descriptive statistics to summarize data and provide context for your analysis.

Inferential Statistics: Make inferences about populations based on sample data to draw meaningful conclusions.
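To make the regression step concrete, the sketch below fits a straight line with ordinary least squares using only the standard library. The function names are illustrative, and simple linear regression is just the most basic of the regression techniques the step refers to.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return slope, intercept

def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new x value."""
    slope, intercept = model
    return slope * x + intercept
```

For example, fitting the points (1, 3), (2, 5), (3, 7), (4, 9) recovers a slope of 2 and an intercept of 1, since those points lie exactly on y = 2x + 1.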

Step 6: Machine Learning - Predictive Analytics

Algorithm Selection: Choose suitable machine learning algorithms based on your analysis goals and data characteristics.

Model Training: Train machine learning models using historical data to learn patterns.

Validation and Testing: Evaluate model performance using validation and testing datasets to ensure reliability.

Prediction and Classification: Apply trained models to make predictions or classify new data.

Model Interpretation: Understand and interpret machine learning model outputs to extract insights.
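The train, test, and predict cycle described above can be shown with a deliberately tiny example. Everything here is an assumption made for illustration: the `DATA` points, the 75/25 split, and the choice of a 1-nearest-neighbour classifier, which is about the simplest model that still "learns" from historical data.

```python
import random
from math import dist

# Hypothetical labelled data: (features, label), two well-separated groups.
DATA = [((1, 1), "low"), ((1, 2), "low"), ((2, 1), "low"), ((2, 2), "low"),
        ((8, 8), "high"), ((8, 9), "high"), ((9, 8), "high"), ((9, 9), "high")]

def split(rows, test_ratio=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a share of them for testing."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_ratio))
    return rows[:cut], rows[cut:]

def predict(train, point):
    """1-nearest neighbour: return the label of the closest training row."""
    _, label = min(train, key=lambda row: dist(row[0], point))
    return label

def accuracy(train, test):
    """Share of held-out rows whose predicted label matches the true label."""
    return sum(predict(train, features) == label for features, label in test) / len(test)
```

Evaluating on rows the model never saw during training is the point of the split: accuracy measured on the training rows themselves would say little about reliability on new data.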

Step 7: Data Visualization - Communicating Insights

Chart and Graph Creation: Create various types of charts, graphs, and visualizations to represent data effectively.

Dashboard Development: Build interactive dashboards to provide stakeholders with dynamic views of insights.

Visual Storytelling: Use data visualization to tell a compelling and coherent story that communicates findings clearly.

Audience Consideration: Tailor visualizations to suit the needs of both technical and non-technical stakeholders.

Enhance Decision-Making: Visualization aids decision-makers in understanding complex data and making informed choices.

Step 8: Data Interpretation - Drawing Conclusions and Recommendations

Recommendations: Provide actionable recommendations based on your conclusions and their implications.

Stakeholder Communication: Communicate analysis results effectively to decision-makers and stakeholders.

Domain Expertise: Apply domain knowledge to ensure that conclusions align with the context of the problem.

Step 9: Continuous Improvement - The Iterative Process

Monitoring Outcomes: Continuously monitor the real-world outcomes of your decisions and predictions.

Model Refinement: Adapt and refine models based on new data and changing circumstances.

Iterative Analysis: Embrace an iterative approach to data analysis to maintain relevance and effectiveness.

Feedback Loop: Incorporate feedback from stakeholders and users to improve analytical processes and models.

Step 10: Ethical Considerations - Data Integrity and Responsibility

Data Privacy: Ensure that data handling respects individuals' privacy rights and complies with data protection regulations.

Bias Detection and Mitigation: Identify and mitigate bias in data and algorithms to ensure fairness.

Fairness: Strive for fairness and equitable outcomes in decision-making processes influenced by data.

Ethical Guidelines: Adhere to ethical and legal guidelines in all aspects of data analytics to maintain trust and credibility.

Data analytics is an exciting and profitable field that enables people and companies to use data to make wise decisions. Understanding the fundamentals described in this guide will prepare you to start your data analytics journey. To become a skilled data analyst, keep in mind that practice and ongoing learning are essential. If you need help implementing data analytics in your organization or want to learn more, consult professionals or sign up for specialized courses. The ACTE Institute offers comprehensive data analytics training courses that can provide you with the knowledge and skills necessary to excel in this field, along with job placement and certification. So put on your work boots, investigate the resources, and begin transforming.


Text

Healthcare Market Research: Why Does It Matter?

Healthcare market research (MR) providers interact with several stakeholders to discover and learn about in-demand treatment strategies and patients’ requirements. Their insightful reports empower medical professionals, insurance companies, and pharma businesses to engage with patients in more fulfilling ways. This post will elaborate on the growing importance of healthcare market research.

What is Healthcare Market Research?

Market research describes consumer and competitor behaviors using first-hand or public data collection methods, like surveys and web scraping. In medicine and life sciences, clinicians and accessibility device developers can leverage it to improve patient outcomes. They grow faster by enhancing their approaches as validated MR reports recommend.

Finding key opinion leaders (KOL), predicting demand dynamics, or evaluating brand recognition efforts becomes more manageable thanks to domain-relevant healthcare market research consulting. Although primary MR helps with authority-building, monitoring how others in the target field innovate their business models is also essential. So, global health and life science enterprises value secondary market research as much as primary data-gathering procedures.

The Importance of Modern Healthcare Market Research

1| Learning What Competitors Might Do Next

Businesses must beware of market share fluctuations due to competitors’ expansion strategies. If your clients are more likely to seek help from rival brands, this situation suggests failure to compete.

Companies might provide fitness products, over-the-counter (OTC) medicines, or childcare facilities. However, they will always lose to a competitor who can satisfy the stakeholders’ demands more efficiently. These developments evolve over the years, during which you can study and estimate business rivals’ future vision.

You want to track competing businesses’ press releases, public announcements, new product launches, and marketing efforts. You must also analyze their quarter-on-quarter market performance. If the data processing scope exceeds your tech capabilities, consider using healthcare data management services offering competitive intelligence integrations.

2| Understanding Patients and Their Needs for Unique Treatment

Patients can experience unwanted bodily changes upon consuming a medicine improperly. Otherwise, they might struggle to use your accessibility technology. If healthcare providers implement a user-friendly feedback and complaint collection system, they can reduce delays. As a result, patients will find a cure for their discomfort more efficiently.

However, processing descriptive responses through manual means is no longer necessary. Most market research teams have embraced automated unstructured data processing breakthroughs. They can guess a customer’s emotions and intentions from submitted texts without frequent human intervention. This era of machine learning (ML) offers ample opportunities to train ML systems to sort patients’ responses quickly.

So, life science companies can increase their employees’ productivity if their healthcare market research providers support ML-based feedback sorting and automation strategies.
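As a rough sketch of what ML-based feedback sorting could look like, the example below trains a tiny bag-of-words classifier with add-one smoothing on a handful of labelled responses. The training texts, the labels, and the function names are all invented for illustration; a real system would use far more data and a proper ML library.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labelled patient feedback used as training data.
EXAMPLES = [
    ("the device was easy to use", "praise"),
    ("great support and quick recovery", "praise"),
    ("the medicine caused nausea", "complaint"),
    ("device hard to use and painful", "complaint"),
]

def train(examples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Pick the label whose word frequencies best explain the text."""
    vocabulary = {word for label_counts in counts.values() for word in label_counts}

    def score(label):
        total = sum(counts[label].values())
        # Add-one (Laplace) smoothing so unseen words do not zero out a label.
        return sum(math.log((counts[label][word] + 1) / (total + len(vocabulary)))
                   for word in text.lower().split())

    return max(counts, key=score)
```

With `model = train(EXAMPLES)`, a new response such as "nausea after the medicine" would be routed to the complaint queue without anyone reading it first, which is the productivity gain the paragraph above describes.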

Besides, hospitals, rehabilitation centers, and animal care facilities can incorporate virtual or physical robots powered by conversational artificial intelligence (AI). Doing so is one of the potential approaches to addressing certain patients’ loneliness problems throughout hospitalization. Utilize MR to ask your stakeholders whether such integrations improve their living standards.

3| Improving Marketing and Sales

Healthcare market research helps pharma and biotechnology corporations categorize customer preferences according to their impact on sales. It also reveals how brands can appeal to more people when introducing a new product or service. One approach is to shut down or downscale poorly performing ideas.

If a healthcare facility can reduce resources spent on underperforming promotions, it can redirect them to more engaging campaigns. Likewise, MR specialists let patients and doctors directly communicate their misgivings about a medicine or treatment via online channels. The scale of these surveys can extend to national, continental, or global markets. This is more feasible now that cloud platforms flexibly adjust the resources a market research project may need.

With consistent communication involving doctors, patients, equipment vendors, and pharmaceutical brands, the healthcare industry will be more accountable. It will thrive sustainably.

Healthcare Market Research: Is It Ethical?

Market researchers in healthcare and life sciences will rely more on data-led planning as competition increases and customers demand richer experiences like telemedicine. Unsurprisingly, awareness of healthcare infrastructure has skyrocketed since 2020. At the same time, life science companies must proceed with caution when handling sensitive data in a patient’s clinical history.

On one hand, universities and private research projects need more healthcare data. On the other, threats of clinical record misuse are real, with the potential for irreparable financial and psychological damage.

Ideally, hospitals, laboratories, and pharmaceutical firms must inform patients about the use of health records for research or treatment intervention. Today, reputed data providers often conduct MR surveys, use focus groups, and scan scholarly research publications. They want to respect patients’ choice in who gets to store, modify, and share the data.

Best Practices for Healthcare Market Research Projects

Legal requirements affecting healthcare data analysis, market research, finance, and ethics vary worldwide. Your data providers must recognize and respect this reality. Otherwise, gathering, storing, analyzing, sharing, or deleting a patient’s clinical records can increase legal risks.

Even if a healthcare business has no malicious intention behind extracting insights, cybercriminals can steal healthcare data. Therefore, invest in robust IT infrastructure, partner with experts, and prioritize data governance.

Like customer-centricity in commercial market research applications, dedicate your design philosophy to patient-centricity.

Incorporating health economics and outcomes research (HEOR) will depend on real-world evidence (RWE). Therefore, protect data integrity and increase quality management standards. If required, find automated data validation assistance and develop or rent big data facilities.

Capture data on present industry trends while maintaining a grasp on long-term objectives. After all, a lot of data is excellent for accuracy, but relevance is the backbone of analytical excellence and business focus.

Conclusion

Given this situation, transparency is the key to protecting stakeholder faith in healthcare data management. As such, MR consultants must act accordingly. Healthcare market research is not unethical. Yet, this statement stays valid only if a standardized framework specifies when patients’ consent trumps medical researchers’ data requirements.

Market research techniques can help fix the long-standing communication and ethics issues in doctor-patient relationships if appropriately configured, highlighting their importance in the healthcare industry’s progress. When patients willingly cooperate with MR specialists, identifying recovery challenges or clinical devices’ ergonomic failures is quick. No wonder that health and life sciences organizations want to optimize their offerings by using market research.


Text

Enhancing customer experiences with AI - Sachin Dev Duggal

The CX Challenge: From Data Deluge to Personalized Insights

Improving the customer experience (CX) has always been a concern for businesses, and the difficulty lies in harnessing the vast volumes of data generated through every interaction: web clicks, social network engagements, problem-solving conversations, and others. This data deluge is usually scattered and unstructured, making it difficult to extract the meaningful insights needed for strategic CX decisions.

This is where AI steps in. AI integrated with Machine Learning (ML) possesses unique strengths in pattern recognition and data analysis; they can sift through mountains of customer data revealing hidden trends and preferences. Imagine being able to predict customer needs proactively, personalize marketing campaigns in real-time, or identify at-risk customers before they churn.

There’s no doubt that personalization is one of the areas where AI solutions leave indelible marks. Modern users demand personalized experiences within the realm of CX since they anticipate exclusive responses corresponding to their distinct preferences. By crafting customized interactions across various touchpoints from targeted marketing campaigns to intuitive user interfaces, Builder AI under supervision by Sachin Dev Duggal facilitates tailored customer experience. Through predictive analytics as well as machine learning algorithms, businesses can accurately predict customer needs thereby building deeper relationships leading to brand loyalty.

Symphony of Customer Interactions and Customer Satisfaction

Not all AI solutions are created equal. In order to have an impact on CX transformation, we need AI that goes beyond mere automation. This is where AI comes into its own. Platforms like Sachin Dev Duggal’s Builder AI go above and beyond pre-programmed NLP-powered chatbots or basic analytics by enabling businesses to develop and manage a complete AI ecosystem for CX. Think of it as a conductor, harmonizing customer interactions.

AI is not a passing trend; it's a revolution in the making. By implementing AI, businesses can set a new bar of customer satisfaction, fostering agility, efficiency, and a culture of innovation.

#builder.ai#AI#artificial intelligence#author sachin duggal#builder ai#builder ai news#business#sachin dev duggal#Sachin Duggal#sachin dev duggal author#sachin dev duggal builder.ai#sachin dev duggal ey#sachin dev duggal news#sachin duggal builder.ai#sachin duggal#sachin duggal ey#sachindevduggal#sachinduggal#tech learning#technology#software development


Text

Navigating the Full Stack: A Holistic Approach to Web Development Mastery

Introduction: In the ever-evolving world of web development, full stack developers are the architects behind the seamless integration of frontend and backend technologies. Excelling in both realms is essential for creating dynamic, user-centric web applications. In this comprehensive exploration, we'll embark on a journey through the multifaceted landscape of full stack development, uncovering the intricacies of crafting compelling user interfaces and managing robust backend systems.

Frontend Development: Crafting Engaging User Experiences

1. Markup and Styling Mastery:

HTML (Hypertext Markup Language): Serves as the foundation for structuring web content, providing the framework for user interaction.

CSS (Cascading Style Sheets): Dictates the visual presentation of HTML elements, enhancing the aesthetic appeal and usability of web interfaces.

2. Dynamic Scripting Languages:

JavaScript: Empowers frontend developers to add interactivity and responsiveness to web applications, facilitating seamless user experiences.

Frontend Frameworks and Libraries: Harness the power of frameworks like React, Angular, or Vue.js to streamline development and enhance code maintainability.

3. Responsive Design Principles:

Ensure web applications are accessible and user-friendly across various devices and screen sizes.

Implement responsive design techniques to adapt layout and content dynamically, optimizing the experience for all users.

4. User-Centric Design Practices:

Employ UX design methodologies to create intuitive interfaces that prioritize user needs and preferences.

Conduct usability testing and gather feedback to refine interface designs and enhance overall user satisfaction.

Backend Development: Managing Data and Logic

1. Server-side Proficiency:

Backend Programming Languages: Utilize languages and runtimes like JavaScript (Node.js), Python, Ruby, or Java to implement server-side logic and handle client requests.

Server Frameworks and Tools: Leverage frameworks such as Express.js, Django, or Ruby on Rails to expedite backend development and ensure scalability.

2. Effective Database Management:

Relational and Non-relational Databases: Employ relational databases like MySQL or PostgreSQL and non-relational stores like MongoDB or Firebase to store and manage structured and unstructured data efficiently.

API Development: Design and implement RESTful or GraphQL APIs to facilitate communication between the frontend and backend components of web applications.
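To give a feel for what a RESTful endpoint involves, here is a minimal sketch written as a plain WSGI application, so it needs no framework. The `ITEMS` store and the two routes are hypothetical, and for brevity anything other than the two GET routes simply gets a 404.

```python
import json

# Hypothetical in-memory store standing in for a real database.
ITEMS = {1: {"id": 1, "name": "notebook"}, 2: {"id": 2, "name": "pen"}}

def app(environ, start_response):
    """Minimal WSGI application exposing two read-only REST-style routes."""
    method = environ["REQUEST_METHOD"]
    path = environ["PATH_INFO"]
    item_id = path.rsplit("/", 1)[-1]

    if method == "GET" and path == "/items":
        # Collection route: return every item.
        status, payload = "200 OK", list(ITEMS.values())
    elif (method == "GET" and path.startswith("/items/")
          and item_id.isdecimal() and int(item_id) in ITEMS):
        # Single-resource route: return one item by its numeric id.
        status, payload = "200 OK", ITEMS[int(item_id)]
    else:
        status, payload = "404 Not Found", {"error": "not found"}

    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, the standard library's `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` can serve it; a frontend would then fetch `/items` and render the JSON it receives.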

3. Security and Performance Optimization:

Implement robust security measures to safeguard user data and protect against common vulnerabilities.

Optimize backend performance through techniques such as caching, query optimization, and load balancing, ensuring optimal application responsiveness.
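Of the performance techniques listed above, caching is the easiest to show in a few lines. The sketch below uses Python's built-in `functools.lru_cache`; the `load_product` function and the `QUERY_COUNT` counter are hypothetical stand-ins for a slow database query.

```python
from functools import lru_cache

# Counts how often the simulated "database" is actually hit.
QUERY_COUNT = {"n": 0}

@lru_cache(maxsize=256)
def load_product(product_id):
    """Hypothetical slow lookup; the body stands in for a real database query."""
    QUERY_COUNT["n"] += 1
    return (product_id, f"product-{product_id}")
```

Repeated calls with the same `product_id` are answered from memory, so only the first call increments `QUERY_COUNT`. An in-process cache like this suits data that rarely changes; data that changes often also needs an invalidation strategy, which this sketch leaves out.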

Full Stack Development: Harmonizing Frontend and Backend

1. Seamless Integration of Technologies:

Cultivate expertise in both frontend and backend technologies to facilitate seamless communication and collaboration across the development stack.

Bridge the gap between user interface design and backend functionality to deliver cohesive and impactful web experiences.

2. Agile Project Management and Collaboration:

Collaborate effectively with cross-functional teams, including designers, product managers, and fellow developers, to plan, execute, and deploy web projects.

Utilize agile methodologies and version control systems like Git to streamline collaboration and track project progress efficiently.

3. Lifelong Learning and Adaptation:

Embrace a growth mindset and prioritize continuous learning to stay abreast of emerging technologies and industry best practices.

Engage with online communities, attend workshops, and pursue ongoing education opportunities to expand skill sets and remain competitive in the evolving field of web development.

Conclusion: Mastering full stack development requires a multifaceted skill set encompassing frontend design principles, backend architecture, and effective collaboration. By embracing a holistic approach to web development, full stack developers can craft immersive user experiences, optimize backend functionality, and navigate the complexities of modern web development with confidence and proficiency.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology


Text

Firecrawl: Easy web data extraction for AI applications

As organizations increasingly rely on large language models (LLMs) to process web-based information, the challenge of converting unstructured websites into clean, analyzable formats has become critical. Firecrawl, an open-source web crawling and data extraction tool developed by Mendable, addresses this gap by providing a scalable solution to harvest and structure web content for AI applications.…


Text

Impact of AI on Web Scraping Practices

Introduction

Advances in artificial intelligence (AI) have recently made web scraping far more efficient. As more enterprises and researchers rely on data extraction to derive insights and make decisions, AI-enabled methods have turned traditional scraping techniques into ones that are more efficient, more scalable, and more resistant to anti-scraping measures.

This blog discusses the effects of AI on web scraping, how AI-powered automation is changing the web scraping industry, the challenges being faced, and, ultimately, the road ahead for web scraping with AI.

How AI is Transforming Web Scraping

1. Enhanced Data Extraction Efficiency

Traditional scrapers rely on rule-based extraction: a script is written for one particular site, with that site's structure and extraction rules hard-coded. AI-based scraping avoids this brittleness because the scraper adapts automatically when a website's structure changes, so the same data keeps being extracted without constant rewrites of the script.
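The brittleness of hard-coded rules is easy to demonstrate. In the sketch below, a rule tied to `class="price"` works on one hypothetical page layout and silently fails on a redesigned one, while a layout-independent pattern match still finds the value. The pattern match is a plain regular expression, not AI; it only stands in for the idea of extraction that does not depend on exact markup. All page snippets and names are made up.

```python
import re
from html.parser import HTMLParser

class PriceByClass(HTMLParser):
    """Rule-based: only captures text inside an element with class="price"."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("class") == "price":
            self._inside = True

    def handle_data(self, data):
        if self._inside:
            self.prices.append(data.strip())
            self._inside = False

def extract_by_rule(html):
    parser = PriceByClass()
    parser.feed(html)
    return parser.prices

def extract_by_pattern(html):
    # Strip the tags, then look for anything shaped like a price.
    text = re.sub(r"<[^>]+>", " ", html)
    return re.findall(r"[$€£]\s?\d+(?:\.\d{2})?", text)

# Hypothetical page before and after a site redesign.
OLD_PAGE = '<div><span class="price">$19.99</span></div>'
NEW_PAGE = '<div><b class="amount">$19.99</b></div>'
```

`extract_by_rule` returns the price for `OLD_PAGE` but nothing for `NEW_PAGE`, whereas `extract_by_pattern` handles both, which is the gap adaptive scrapers aim to close in a more general way.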

2. AI-Powered Web Crawlers

Machine learning algorithms enable web crawlers to mimic human browsing behavior, reducing the risk of detection. These AI-driven crawlers can:

Identify patterns in website layouts.

Adapt to dynamic content.

Handle complex JavaScript-rendered pages with ease.

3. Natural Language Processing (NLP) for Data Structuring

NLP helps in:

Extracting meaningful insights from unstructured text.

Categorizing and classifying data based on context.

Understanding sentiment and contextual relevance in customer reviews and news articles.

4. Automated CAPTCHA Solving

Many websites use CAPTCHAs to block bots. AI models, especially deep learning-based Optical Character Recognition (OCR) techniques, help bypass these challenges by simulating human-like responses.

5. AI in Anti-Detection Mechanisms

AI-powered web scraping integrates:

User-agent rotation to simulate diverse browsing behaviors.

IP Rotation & Proxies to prevent blocking.

Headless Browsers & Human-Like Interaction for bypassing bot detection.

Applications of AI in Web Scraping

1. E-Commerce Price Monitoring

AI scrapers help businesses track competitors' pricing, stock availability, and discounts in real-time, enabling dynamic pricing strategies.

2. Financial & Market Intelligence

AI-powered web scraping extracts financial reports, news articles, and stock market data for predictive analytics and trend forecasting.

3. Lead Generation & Business Intelligence

Automating the collection of business contact details, customer feedback, and sales leads through AI-driven scraping solutions.

4. Social Media & Sentiment Analysis

Extracting social media conversations, hashtags, and sentiment trends to analyze brand reputation and customer perception.

5. Healthcare & Pharmaceutical Data Extraction

AI scrapers retrieve medical research, drug prices, and clinical trial data, aiding healthcare professionals in decision-making.

Challenges in AI-Based Web Scraping

1. Advanced Anti-Scraping Technologies

Websites employ sophisticated detection methods, including fingerprinting and behavioral analysis.

AI mitigates these by mimicking real user interactions.

2. Data Privacy & Legal Considerations

Compliance with data regulations like GDPR and CCPA is essential.

Ethical web scraping practices ensure responsible data usage.

3. High Computational Costs

AI-based web scrapers require GPU-intensive resources, leading to higher operational costs.

Optimization techniques, such as cloud-based scraping, help reduce costs.

Future Trends in AI for Web Scraping

1. AI-Driven Adaptive Scrapers

Scrapers that self-learn and adjust to new website structures without human intervention.

2. Integration with Machine Learning Pipelines

Combining AI scrapers with data analytics tools for real-time insights.

3. AI-Powered Data Anonymization

Protecting user privacy by automating data masking and filtering.

4. Blockchain-Based Data Validation

Ensuring authenticity and reliability of extracted data using blockchain verification.
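The data masking mentioned under AI-Powered Data Anonymization above can be approximated, in its simplest form, with pattern-based redaction. The sketch below is rule-based rather than AI-driven, and the two regular expressions are illustrative assumptions that will not catch every email or phone format.

```python
import re

# Simplified patterns; real-world formats vary more than these allow for.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def mask(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)
```

Running scraped text through a filter like `mask` before storage keeps obvious personal identifiers out of the dataset, which also helps with the GDPR and CCPA obligations raised earlier in the post.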

Conclusion

Adding AI to web scraping has made data extraction smarter, more flexible, and more scalable. Using AI for web scraping helps organizations navigate anti-bot mechanisms, dynamic website changes, and unstructured data processing. In the future, AI-based web scraping will only become more advanced and contribute further innovations across industries.

For organizations ready to embrace AI-powered data extraction, CrawlXpert offers state-of-the-art solutions designed for present-day web scraping tasks. Get started with CrawlXpert to benefit from high-quality, AI-enabled, automated web scraping solutions!

Know More : https://www.crawlxpert.com/blog/ai-on-web-scraping-practices


Text

Top 5 Technologies Every Full Stack Development Learner Must Know

In today’s rapidly evolving digital landscape, Full Stack Development has emerged as a highly valued skill set. As companies strive to develop faster, smarter, and more scalable web applications, the demand for proficient full stack developers continues to grow. If you're planning to learn Full Stack Development in Pune or anywhere else, understanding the core technologies involved is crucial for a successful journey.

Whether you're starting your career, switching fields, or looking to enhance your technical expertise, being well-versed in both front-end and back-end technologies is essential. Many reputable institutes offer programs like the Java Programming Course with Placement to help learners bridge this knowledge gap and secure jobs right after training.

Let’s dive into the top 5 technologies every full stack development learner must know to thrive in this competitive field.

1. HTML, CSS, and JavaScript – The Front-End Trinity

Every aspiring full stack developer must begin with the basics. HTML, CSS, and JavaScript form the foundation of front-end development.

Why they matter:

HTML (HyperText Markup Language): Structures content on the web.

CSS (Cascading Style Sheets): Styles and enhances the appearance of web pages.

JavaScript: Adds interactivity and dynamic elements to web interfaces.

These three are the building blocks of modern web development. Without mastering them, it’s impossible to progress to more advanced technologies like frameworks and libraries.

🔹 Pro Tip: If you're learning full stack development in Pune, choose a program that emphasizes hands-on training in HTML, CSS, and JavaScript along with live projects.

2. Java and Spring Boot – The Back-End Backbone

While there are many languages used for back-end development, Java remains one of the most in-demand. Known for its reliability and scalability, Java is often used in enterprise-level applications. Learning Java along with the Spring Boot framework is a must for modern backend development.

Why learn Java with Spring Boot?

Java is platform-independent and widely used across industries.

Spring Boot simplifies backend development, making it faster to develop RESTful APIs and microservices.

Integration with tools like Hibernate and JPA makes database interaction smoother.

Several institutes offer a Java Programming Course with Placement, ensuring that learners not only understand the theory but also get job-ready skills and employment opportunities.

3. Version Control Systems – Git and GitHub

Managing code, especially in team environments, is a key part of a developer's workflow. That’s where Git and GitHub come in.

Key Benefits:

Track changes efficiently with Git.

Collaborate on projects through GitHub repositories.

Create branches and pull requests to manage code updates seamlessly.

Version control is not optional. Every developer—especially full stack developers—must know how to work with Git from the command line as well as GitHub’s web interface.

🔹 Learners enrolled in a full stack development course in Pune often get dedicated modules on Git and version control, helping them work professionally on collaborative projects.

4. Databases – SQL & NoSQL

Full stack developers are expected to handle both front-end and back-end, and this includes the database layer. Understanding how to store, retrieve, and manage data is vital.

Must-know Databases:

MySQL/PostgreSQL (SQL databases): Ideal for structured data and relational queries.

MongoDB (NoSQL database): Great for unstructured or semi-structured data, and widely used with Node.js.

Understanding the difference between relational and non-relational databases helps developers pick the right tool for the right task. Courses that combine backend technologies with database management offer a more complete learning experience.
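To make the relational versus document distinction concrete, here is a small sketch. It uses Python's built-in sqlite3 module as a stand-in for MySQL/PostgreSQL and a plain dictionary as a stand-in for a MongoDB document; the table names, fields, and values are invented for the example.

```python
import sqlite3

# Relational style: fixed tables linked by keys, queried with a JOIN.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "user_id INTEGER REFERENCES users(id), total REAL)"
)
conn.execute("INSERT INTO users VALUES (1, 'Asha')")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, 1, 250.0), (2, 1, 100.0)])

row = conn.execute(
    "SELECT u.name, SUM(o.total) FROM users u "
    "JOIN orders o ON o.user_id = u.id GROUP BY u.id"
).fetchone()

# Document style: related data is nested in one record, and fields may vary per item.
user_doc = {
    "name": "Asha",
    "orders": [{"total": 250.0}, {"total": 100.0, "coupon": "WELCOME"}],
}
doc_total = sum(order["total"] for order in user_doc["orders"])

print(row, doc_total)
```

The relational version enforces a schema and relies on joins, while the document version keeps everything in one flexible record; which fits better depends on how structured the data is.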

5. Frameworks and Libraries – React.js or Angular

Modern web development is incomplete without frameworks and libraries that enhance efficiency and structure. For front-end, React.js and Angular are two of the most popular choices.

Why use frameworks?

They speed up development by offering pre-built components.

Help in creating Single Page Applications (SPAs).

Ensure code reusability and maintainability.

React.js is often preferred for its flexibility and component-based architecture. Angular, backed by Google, is a full-fledged framework that ships with built-in routing, forms handling, and dependency injection.

🔹 Many students who learn full stack development in Pune get to work on live projects using React or Angular, making their portfolios industry-ready.

Final Thoughts

To become a successful full stack developer, one must be comfortable with both the visible and behind-the-scenes aspects of web applications. From mastering HTML, CSS, and JavaScript, diving deep into Java and Spring Boot, to efficiently using Git, managing databases, and exploring modern frameworks—the journey is challenging but rewarding.

In cities like Pune, where tech opportunities are abundant, taking a structured learning path like a Java Programming Course with Placement or a full stack bootcamp is a smart move. These programs often include real-world projects, interview preparation, and job assistance to ensure you hit the ground running.

Quick Recap: Top Technologies to Learn

HTML, CSS & JavaScript – Core front-end skills

Java & Spring Boot – Robust backend development

Git & GitHub – Version control and collaboration

SQL & NoSQL – Efficient data management

React.js / Angular – Powerful front-end frameworks

If you're serious about making your mark in the tech industry, now is the time to learn full stack development in Pune. Equip yourself with the right tools, build a strong portfolio, and take that first step toward a dynamic and future-proof career.


Text

Five Things You Didn’t Know About Unstructured Web

Get the most out of web data with our custom web scraping and crawling solutions. Our intelligent data extraction capabilities help in fetching data from complex websites with ease. Read more https://www.scrape.works/infographics/BigData/five-things-you-didnt-know-about-unstructured-web


Text

Navigating the Complexity of Retail: Challenges and Opportunities for Food & Beverage Brands

In the ever-evolving landscape of the food and beverage industry, the pandemic has acted as a catalyst for significant trends that continue to shape the future of retail. From the surge in online shopping to the impact of inflationary pressures, the complexities facing Food & Beverage brands are both numerous and transformative. As we navigate these challenges, the need for change and evolution has become more crucial than ever, compelling retailers and consumers alike to adapt to the changing tides.

One of the most noticeable trends brought about by the pandemic is the skyrocketing demand for online shopping, and subsequently the massive growth of new and emerging brands. In the United States, food and beverage sales as a percentage of total retail e-commerce sales have risen from 9.3 percent in 2017 to nearly 14 percent in 2022. The trajectory is projected to continue its ascent, reaching an estimated 21.5 percent by 2027 as reported by Statista. This shift has reshaped the way brands approach their sales channels, emphasizing the importance of not only traditional in-store experiences but also the digital realm.

However, alongside these promising trends, a new set of challenges has emerged, largely driven by the current inflationary pressures. This has created a volatile environment where brands are forced to adapt and innovate. For emerging and new brands aspiring to flourish within the retail landscape, it’s crucial to navigate these complexities while maintaining a competitive edge.

A pivotal aspect of this adaptation involves managing the intricate web of sales channels. Brands must not only oversee their direct sales channels, such as Shopify, Amazon, and Walmart but also grapple with vast amounts of data from retail distributors and various retail platforms. Unfortunately, this data often arrives unstructured, sporting different formats, and plagued with inconsistencies. This lack of a holistic understanding makes it difficult for brands to assess the efficacy of their marketing efforts, promotions, and even the true cost of sampling.

For more details, please visit our website: https://www.focusoutlook.com/navigating-the-complexity-of-retail-challenges-and-opportunities-for-food-beverage-brands/


Text

Top 7 Use Cases of Web Scraping in E-commerce

In the fast-paced world of online retail, data is more than just numbers; it's a powerful asset that fuels smarter decisions and competitive growth. With thousands of products, fluctuating prices, evolving customer behaviors, and intense competition, having access to real-time, accurate data is essential. This is where internet scraping comes in.

Internet scraping (also known as web scraping) is the process of automatically extracting data from websites. In the e-commerce industry, it enables businesses to collect actionable insights to optimize product listings, monitor prices, analyze trends, and much more.

In this blog, we’ll explore the top 7 use cases of internet scraping, detailing how each works, their benefits, and why more companies are investing in scraping solutions for growth and competitive advantage.

What is Internet Scraping?

Internet scraping is the process of using bots or scripts to collect data from web pages. This includes prices, product descriptions, reviews, inventory status, and other structured or unstructured data from various websites. Scraping can be used once or scheduled periodically to ensure continuous monitoring. It’s important to adhere to data guidelines, terms of service, and ethical practices. Tools and platforms like TagX ensure compliance and efficiency while delivering high-quality data.

In e-commerce, this practice becomes essential for businesses aiming to stay agile in a saturated and highly competitive market. Instead of manually gathering data, which is time-consuming and prone to errors, internet scraping automates the process and delivers consistent insights at scale.

Before diving into the specific use cases, it's important to understand why so many successful e-commerce companies rely on internet scraping. From competitive pricing to customer satisfaction, scraping empowers businesses to make informed decisions quickly and stay one step ahead in the fast-paced digital landscape.

Below are the top 7 use cases of internet scraping.

1. Price Monitoring

Online retailers scrape competitor sites to monitor prices in real-time, enabling dynamic pricing strategies and maintaining competitiveness. This allows brands to react quickly to price changes.

How It Works

A scraper is programmed to extract pricing details for identical or similar SKUs across competitor sites. The data is compared to your product catalog, and dashboards or alerts are generated to notify you of changes. The scraper checks prices at set intervals, such as hourly, daily, or weekly, depending on the market's volatility. This ensures businesses remain up-to-date with any price fluctuations that could impact their sales or profit margins.
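The comparison step can be sketched in a few lines of Python. This is only an illustration: the SKU names, prices, and the 5% alert threshold are assumptions, and the scraped competitor prices are hard-coded rather than fetched.

```python
def price_alerts(our_prices, competitor_prices, threshold=0.05):
    """Return (sku, our_price, their_price, relative_gap) for SKUs whose gap meets the threshold."""
    alerts = []
    for sku, ours in our_prices.items():
        theirs = competitor_prices.get(sku)
        if not theirs:
            # No competitor listing (or a zero price) for this SKU, so nothing to compare.
            continue
        gap = (ours - theirs) / theirs
        if abs(gap) >= threshold:
            alerts.append((sku, ours, theirs, round(gap, 3)))
    return alerts

# Hypothetical catalog and scraped competitor data.
our_catalog = {"A": 100.0, "B": 50.0, "C": 20.0}
competitor = {"A": 90.0, "B": 49.0}

for alert in price_alerts(our_catalog, competitor):
    print(alert)
```

Running the same check on each scrape (hourly, daily, or weekly) would feed the dashboards or alerts described above.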

Benefits of Price Monitoring

Competitive edge in pricing

Avoids underpricing or overpricing

Enhances profit margins while remaining attractive to customers

Helps with automatic repricing tools

Allows better seasonal pricing strategies

2. Product Catalog Optimization

Scraping competitor and marketplace listings helps optimize your product catalog by identifying missing information, keyword trends, or layout strategies that convert better.

How It Works

Scrapers collect product titles, images, descriptions, tags, and feature lists. The data is analyzed to identify gaps and opportunities in your listings. AI-driven catalog optimization tools use this scraped data to recommend ideal product titles, meta tags, and visual placements. Combining this with A/B testing can significantly improve your conversion rates.

Benefits

Better product visibility

Enhanced user experience and conversion rates

Identifies underperforming listings

Helps curate high-performing metadata templates

3. Competitor Analysis

Internet scraping provides detailed insights into your competitors’ strategies, such as pricing, promotions, product launches, and customer feedback, helping to shape your business approach.

How It Works

Scraped data from competitor websites and social platforms is organized and visualized for comparison. It includes pricing, stock levels, and promotional tactics. You can monitor their advertising frequency, ad types, pricing structure, customer engagement strategies, and feedback patterns. This creates a 360-degree understanding of what works in your industry.

Benefits

Uncover competitive trends

Benchmark product performance

Inform marketing and product strategy

Identify gaps in your offerings

Respond quickly to new product launches

4. Customer Sentiment Analysis

By scraping reviews and ratings from marketplaces and product pages, businesses can evaluate customer sentiment, discover pain points, and improve service quality.

How It Works

Natural language processing (NLP) is applied to scraped review content. Positive, negative, and neutral sentiments are categorized, and common themes are highlighted. Text analysis on these reviews helps detect not just satisfaction levels but also recurring quality issues or logistics complaints. This can guide product improvements and operational refinements.
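As a toy stand-in for a real NLP model, the sketch below categorizes reviews with a tiny hand-written word list and counts recurring complaint terms. The word lists and sample reviews are invented; a production system would typically use a trained sentiment model instead.

```python
import re
from collections import Counter

# Tiny illustrative lexicons; these are assumptions, not a real sentiment dictionary.
POSITIVE = {"great", "love", "excellent", "fast"}
NEGATIVE = {"broken", "late", "poor", "refund"}

def tokenize(review):
    return re.findall(r"[a-z']+", review.lower())

def classify(review):
    """Label a review positive, negative, or neutral by counting lexicon hits."""
    words = tokenize(review)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

def common_complaints(reviews):
    """Count negative lexicon words across reviews classified as negative."""
    counts = Counter()
    for review in reviews:
        if classify(review) == "negative":
            counts.update(w for w in tokenize(review) if w in NEGATIVE)
    return counts

reviews = ["Arrived late and broken", "Late again, want a refund", "Love it"]
print([classify(r) for r in reviews])
print(common_complaints(reviews).most_common(2))
```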

Benefits

Improve product and customer experience

Monitor brand reputation

Address negative feedback proactively

Build trust and transparency

Adapt to changing customer preferences

5. Inventory and Availability Tracking

Track your competitors' stock levels and restocking schedules to predict demand and plan your inventory efficiently.

How It Works

Scrapers monitor product availability indicators (like "In Stock", "Out of Stock") and gather timestamps to track restocking frequency. This enables brands to respond quickly to opportunities when competitors go out of stock. It also supports real-time alerts for critical stock thresholds.
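A minimal sketch of the restock-tracking idea: given timestamped availability readings for one product, record each moment the status flips from out of stock back to in stock. The observations below are made-up sample data, and the status strings are assumed to match the indicators mentioned above.

```python
from datetime import datetime

def restock_events(observations):
    """observations: list of (ISO timestamp, status text), sorted oldest first."""
    events = []
    previous_in_stock = None
    for timestamp, status in observations:
        in_stock = status.strip().lower() == "in stock"
        # A restock is a transition from out of stock to in stock.
        if previous_in_stock is False and in_stock:
            events.append(datetime.fromisoformat(timestamp))
        previous_in_stock = in_stock
    return events

# Hypothetical scraped readings for a single competitor product.
readings = [
    ("2025-01-01T09:00:00", "In Stock"),
    ("2025-01-02T09:00:00", "Out of Stock"),
    ("2025-01-04T09:00:00", "In Stock"),
]
print(restock_events(readings))
```

The gaps between successive events give a rough estimate of restocking frequency.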

Benefits

Avoid overstocking or stockouts

Align promotions with competitor shortages

Streamline supply chain decisions

Improve vendor negotiation strategies

Forecast demand more accurately

6. Market Trend Identification

Scraping data from marketplaces and social commerce platforms helps identify trending products, search terms, and buyer behaviors.

How It Works

Scraped data from platforms like Amazon, eBay, or Etsy is analyzed for keyword frequency, popularity scores, and rising product categories. Trends can also be extracted from user-generated content and influencer reviews, giving your brand insights before a product goes mainstream.
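Keyword-frequency analysis can be approximated by counting words in scraped listing titles from two time windows and flagging terms whose counts rise. The titles and the min_gain cutoff below are illustrative assumptions.

```python
import re
from collections import Counter

def keyword_counts(titles):
    """Count lowercase word occurrences across a list of listing titles."""
    counts = Counter()
    for title in titles:
        counts.update(re.findall(r"[a-z]+", title.lower()))
    return counts

def rising_keywords(old_titles, new_titles, min_gain=2):
    """Return keywords whose count grew by at least min_gain, biggest gain first."""
    old, new = keyword_counts(old_titles), keyword_counts(new_titles)
    gains = {word: new[word] - old[word] for word in new if new[word] - old[word] >= min_gain}
    return sorted(gains, key=gains.get, reverse=True)

# Hypothetical listing titles scraped in two different weeks.
last_week = ["steel water bottle", "yoga mat"]
this_week = ["insulated water bottle", "insulated steel bottle", "insulated travel mug"]
print(rising_keywords(last_week, this_week))
```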

Benefits

Stay ahead of consumer demand

Launch timely product lines

Align campaigns with seasonal or viral trends

Prevent dead inventory

Invest confidently in new product development

7. Lead Generation and Business Intelligence

Gather contact details, seller profiles, or niche market data from directories and B2B marketplaces to fuel outreach campaigns and business development.

How It Works

Scrapers extract publicly available email IDs, company names, product listings, and seller ratings. The data is filtered based on industry and size. Lead qualification becomes faster when you pre-analyze industry relevance, product categories, or market presence through scraped metadata.

Benefits

Expand B2B networks

Targeted marketing efforts

Increase qualified leads and partnerships

Boost outreach accuracy

Customize proposals based on scraped insights

How Does Internet Scraping Work in E-commerce?

Target Identification: Identify the websites and data types you want to scrape, such as pricing, product details, or reviews.

Bot Development: Create or configure a scraper bot using tools like Python, BeautifulSoup, or Scrapy, or use advanced scraping platforms like TagX.

Data Extraction: Bots navigate web pages, extract required data fields, and store them in structured formats (CSV, JSON, etc.).

Data Cleaning: Filter, de-duplicate, and normalize scraped data for analysis.

Data Analysis: Feed clean data into dashboards, CRMs, or analytics platforms for decision-making.

Automation and Scheduling: Set scraping frequency based on how dynamic the target sites are.

Integration: Sync data with internal tools like ERP, inventory systems, or marketing automation platforms.
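The extraction and cleaning steps above can be sketched with Python's standard library alone. This is a simplified illustration: the HTML snippet and its class names are invented, and a real project might use BeautifulSoup or Scrapy, as mentioned above, rather than html.parser.

```python
import json
from html.parser import HTMLParser

# Hypothetical product markup standing in for a fetched page.
HTML = """
<div class="product"><span class="name">Mug</span><span class="price">$12.50</span></div>
<div class="product"><span class="name">Mug</span><span class="price">$12.50</span></div>
<div class="product"><span class="name"> Kettle </span><span class="price">$30</span></div>
"""

class ProductParser(HTMLParser):
    """Collect the name and price text of each product block into a dict."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "div" and css_class == "product":
            self.rows.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

def clean(rows):
    """Normalize whitespace and price format, then drop exact duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        record = (row["name"].strip(), float(row["price"].strip().lstrip("$")))
        if record not in seen:
            seen.add(record)
            cleaned.append({"name": record[0], "price": record[1]})
    return cleaned

parser = ProductParser()
parser.feed(HTML)
products = clean(parser.rows)
print(json.dumps(products))  # structured output ready for analysis or integration
```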

Key Benefits of Internet Scraping for E-commerce

Scalable Insights: Access large volumes of data from multiple sources in real time

Improved Decision Making: Real-time data fuels smarter, faster decisions

Cost Efficiency: Reduces the need for manual research and data entry

Strategic Advantage: Gives brands an edge over slower-moving competitors

Enhanced Customer Experience: Drives better content, service, and personalization

Automation: Reduces human effort and speeds up analysis

Personalization: Tailor offers and messaging based on real-world competitor and customer data

Why Businesses Trust TagX for Internet Scraping

TagX offers enterprise-grade, customizable internet scraping solutions specifically designed for e-commerce businesses. With compliance-first approaches and powerful automation, TagX transforms raw online data into refined insights. Whether you're monitoring competitors, optimizing product pages, or discovering market trends, TagX helps you stay agile and informed.

Their team of data engineers and domain experts ensures that each scraping task is accurate, efficient, and aligned with your business goals. Plus, their built-in analytics dashboards reduce the time from data collection to actionable decision-making.

Final Thoughts

E-commerce success today is tied directly to how well you understand and react to market data. With internet scraping, brands can unlock insights that drive pricing, inventory, customer satisfaction, and competitive advantage. Whether you're a startup or a scaled enterprise, the smart use of scraping technology can set you apart.

Ready to outsmart the competition? Partner with TagX to start scraping smarter.


Text

Data Science Trending in 2025

What is Data Science?

Data Science is an interdisciplinary field that combines scientific methods, processes, algorithms, and systems to extract knowledge and insights from structured and unstructured data. It blends various tools, algorithms, and machine learning principles with the goal of discovering hidden patterns in raw data.

Introduction to Data Science

In the digital era, data is being generated at an unprecedented scale—from social media interactions and financial transactions to IoT sensors and scientific research. This massive amount of data is often referred to as "Big Data." Making sense of this data requires specialized techniques and expertise, which is where Data Science comes into play.

Data Science enables organizations and researchers to transform raw data into meaningful information that can help make informed decisions, predict trends, and solve complex problems.

History and Evolution

The term "Data Science" was first coined in the 1960s, but the field has evolved significantly over the past few decades, particularly with the rise of big data and advancements in computing power.

Early days: Initially, data analysis was limited to simple statistical methods.

Growth of databases: With the emergence of databases, data management and retrieval improved.

Rise of machine learning: The integration of algorithms that can learn from data added a predictive dimension.

Big Data Era: Modern data science deals with massive volumes, velocity, and variety of data, leveraging distributed computing frameworks like Hadoop and Spark.

Components of Data Science

1. Data Collection and Storage

Data can come from multiple sources:

Databases (SQL, NoSQL)

APIs

Web scraping

Sensors and IoT devices

Social media platforms

The collected data is often stored in data warehouses or data lakes.

2. Data Cleaning and Preparation

Raw data is often messy—containing missing values, inconsistencies, and errors. Data cleaning involves:

Handling missing or corrupted data

Removing duplicates

Normalizing and transforming data into usable formats

3. Exploratory Data Analysis (EDA)

Before modeling, data scientists explore data visually and statistically to understand its main characteristics. Techniques include:

Summary statistics (mean, median, mode)

Data visualization (histograms, scatter plots)

Correlation analysis
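A quick sketch of these EDA techniques using only the standard library. The price and units-sold figures are invented sample data, and the Pearson correlation is computed by hand to keep the example self-contained.

```python
import statistics

# Hypothetical observations: product price and units sold at that price.
prices = [10, 12, 12, 15, 20]
units = [100, 90, 92, 70, 50]

summary = {
    "mean": statistics.mean(prices),
    "median": statistics.median(prices),
    "mode": statistics.mode(prices),
}

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return covariance / (spread_x * spread_y)

print(summary)
print(round(pearson(prices, units), 3))
```

In this sample the correlation is strongly negative, which is the kind of relationship EDA is meant to surface before any modeling begins.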

4. Data Modeling and Machine Learning

Data scientists apply statistical models and machine learning algorithms to:

Identify patterns

Make predictions

Classify data into categories

Common models include regression, decision trees, clustering, and neural networks.

5. Interpretation and Communication

The results need to be interpreted and communicated clearly to stakeholders. Visualization tools like Tableau, Power BI, or matplotlib in Python help convey insights effectively.

Techniques and Tools in Data Science

Statistical Analysis

Foundational for understanding data properties and relationships.

Machine Learning

Supervised and unsupervised learning for predictions and pattern recognition.

Deep Learning

Advanced neural networks for complex tasks like image and speech recognition.

Natural Language Processing (NLP)

Techniques to analyze and generate human language.

Big Data Technologies

Hadoop, Spark, Kafka for handling massive datasets.

Programming Languages

Python: The most popular language due to its libraries like pandas, NumPy, scikit-learn.

R: Preferred for statistical analysis.

SQL: For database querying.

Applications of Data Science

Data Science is used across industries:

Healthcare: Predicting disease outbreaks, personalized medicine, medical image analysis.

Finance: Fraud detection, credit scoring, algorithmic trading.

Marketing: Customer segmentation, recommendation systems, sentiment analysis.

Manufacturing: Predictive maintenance, supply chain optimization.

Transportation: Route optimization, autonomous vehicles.

Entertainment: Content recommendation on platforms like Netflix and Spotify.

Challenges in Data Science

Data Quality: Poor data can lead to inaccurate results.

Data Privacy and Ethics: Ensuring responsible use of data and compliance with regulations.

Skill Gap: Requires multidisciplinary knowledge in statistics, programming, and domain expertise.

Scalability: Handling and processing vast amounts of data efficiently.

Future of Data Science

The future promises further integration of artificial intelligence and automation in data science workflows. Explainable AI, augmented analytics, and real-time data processing are areas of rapid growth.

As data continues to grow exponentially, the importance of data science in guiding strategic decisions and innovation across sectors will only increase.

Conclusion

Data Science is a transformative field that unlocks the power of data to solve real-world problems. Through a combination of techniques from statistics, computer science, and domain knowledge, data scientists help organizations make smarter decisions, innovate, and gain a competitive edge.

Whether you are a student, professional, or business leader, understanding data science and its potential can open doors to exciting opportunities and advancements in technology and society.


Text

The Role of ITLytics in Driving Business Intelligence and Performance Optimization

“Data analytics is the future, and the future is NOW! Every mouse click, keyboard button press, swipe, or tap is used to shape business decisions. Everything is about data these days. Data is information, and information is power.” – Radi, Data Analyst at CENTOGENE

Data is at the heart of every successful business strategy now. As a result, organizations are increasingly turning to next-gen data analytics platforms that transform raw information into actionable intelligence.

And rightly so.

Recent market trends show that data-driven companies experience four times more revenue growth compared to those not using analytics, pushing decision-makers to invest in intelligent platforms that fuel real-time insights, productivity, and growth.

In this article, we will discuss one such platform, ITLytics, a powerful web-based Data Analytics Platform that empowers organizations to accelerate business intelligence and performance optimization. Let’s dive in.

ITLytics: A Brief Introduction

ITLytics is a predictive analytics tool that is transforming the way businesses analyze, visualize, and act on their data. Built to be intuitive, scalable, and fully customizable, this domain-agnostic data analytics and visualization platform empowers organizations to extract deep insights from both structured and unstructured data.

Combining the flexibility of open-source architecture with enterprise-grade analytics capabilities, ITLytics delivers measurable value, offering zero licensing fees, a 25% reduction in implementation costs, and a 20% improvement in decision-making effectiveness.

Noteworthy Features of ITLytics

A great data analytics platform is only as strong as the features it offers, and ITLytics delivers on every front. Here’s a list of its top features:

Configurable Visualizations: This tool offers pre-designed templates that simplify and accelerate the process of generating insightful reports. Users can configure charts, customize visual themes, and create impactful dashboards without writing a single line of code.

Dynamic Dashboard: The platform’s real-time dashboard allows for seamless data drill-downs, letting users interact with data on a granular level. The dynamic layout helps stakeholders monitor KPIs, detect anomalies, and make quick business decisions.

Data Source Support: It supports a broad range of data sources, from MSSQL and live data connections to both on-premises and cloud-based infrastructure. This versatility makes the platform suitable for heterogeneous enterprise environments.

Open-Source Flexibility: Unlike other tools that require per-user subscriptions (like Power BI or Tableau), ITLytics is open-source and free to use. Its zero-licensing fee model makes it significantly more affordable without compromising functionality.

Mobile Compatibility: Responsive dashboards and native apps enable mobile users to access data anytime, anywhere, ideal for today’s mobile-first workforce.

Remarkable Benefits of Using ITLytics

The power of ITLytics doesn’t stop at its feature set. Its tangible business benefits make it a preferred choice for decision-makers seeking to enhance performance and business intelligence. Some of its major advantages include:

Better Business Decision-making: With a reported 20% improvement in decision-making, businesses using this tool benefit from faster, more data-informed strategic moves.

High Performance with Large Datasets: Whether deployed on the cloud or on-premises, ITLytics is optimized for performance. It handles large datasets efficiently, making it suitable for enterprises dealing with big data challenges.

Cost-effective Deployment: It delivers enterprise-level performance at lower deployment costs. With no licensing costs and an estimated 25% savings on implementation, it's a smart choice for businesses looking to cut overheads.

Industry Agnostic: As a domain-independent solution, it can be seamlessly applied across industries such as construction, BFSI, healthcare, logistics, and more.

AI Integration: With support for Python, R, and Azure ML, ITLytics acts as a Predictive Analytics Tool that supports forecasting, error detection, and decision modeling.

Community Support: A strong community ecosystem ensures access to online resources, troubleshooting forums, and technical documentation.

Diverse Industries That Benefit from ITLytics

Data-driven strategies are essential for driving important business decisions across industries. Here’s how different sectors can leverage this tool:

Manufacturing: With its intelligent data dashboards, ITLytics can assist manufacturers in tracking inventory levels, optimizing pricing models, and maintaining strict quality control. It can enable deeper analysis of production KPIs, highlight inefficiencies, and support preventive maintenance by identifying patterns in operational data, ultimately leading to reduced costs and higher output efficiency.

Logistics and Supply Chain: For logistics and supply chain enterprises, ITLytics offers real-time visibility into complex operations. It helps identify shipment delays, streamline inventory management, and optimize delivery routes. With accurate forecasting and data-driven insights, companies can reduce lead times and improve cost-effectiveness.

Retail: Retailers can gain a 360-degree view of customer behavior, sales performance, and inventory turnover using this data platform. It supports dynamic pricing, targeted marketing, and demand forecasting, while also detecting potential fraud. The result is improved customer experiences and data-backed strategic planning for growth.

Why ITLytics Stands Out as a Data Analytics Platform

In a market crowded with Data Analysis Software and Business Intelligence Tools, ITLytics distinguishes itself through a compelling mix of affordability, scalability, and versatility. Here’s why it stands out:

Zero Licensing Costs: Most platforms charge per user, but ITLytics removes this barrier entirely, offering a more inclusive and scalable approach

Customization and Flexibility: As an open-source solution, this platform allows businesses to modify dashboards, reports, and integrations based on unique organizational requirements

AI and Predictive Modeling: With built-in support for AI frameworks and machine learning libraries, ITLytics not only analyzes historical data but also forecasts future trends

Drag-and-Drop Interface: Simplifies the user experience by allowing non-technical users to build dashboards and run queries with ease

Superior Data Visualization Software: With sleek charts, graphs, and infographics, it offers storytelling through data, helping stakeholders grasp complex information quickly

ERP and Legacy Integration: Its ability to integrate with legacy systems and ERPs ensures continuity of operations without the need for massive infrastructure overhauls

Conclusion: Empower Smarter Business Decisions with ITLytics

As organizations worldwide face growing pressure to become more data-driven, having the right Data Analytics Platform is no longer optional, it’s critical. ITLytics rises to this challenge by providing a powerful, cost-effective, and scalable solution that drives business intelligence and performance optimization across industries. It’s not just a platform; it’s a strategic enabler in the digital transformation journey. For decision-makers ready to turn their data into actionable intelligence, ITLytics is the key to unlocking operational excellence and long-term success.

Talk to us today to start unlocking the true potential of your data with ITLytics, our powerful, open-source platform designed to enhance business intelligence and performance optimization!

For details on how ITLytics can transform your data strategy, click here: https://www.1point1.com/product/itlytics

Have questions or need a demo? Call us at: 022 66873803 or drop a mail at [email protected]


Text

Cloud Database and DBaaS Market in the United States entering an era of unstoppable scalability

The Cloud Database and DBaaS Market was valued at USD 17.51 billion in 2023 and is expected to reach USD 77.65 billion by 2032, growing at a CAGR of 18.07% from 2024 to 2032.
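For readers who want to sanity-check a growth figure like this, the compound annual growth rate can be recomputed from the start and end values. The sketch below assumes nine compounding years (2023 to 2032); the result should land near the reported CAGR, with small differences possible from rounding or from the base year the report used.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures from the report summary above; the nine-year span is an assumption.
rate = cagr(17.51, 77.65, 2032 - 2023)
print(f"{rate:.2%}")
```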

The Cloud Database and DBaaS Market is experiencing robust expansion as enterprises prioritize scalability, real-time access, and cost-efficiency in data management. Organizations across industries are shifting from traditional databases to cloud-native environments to streamline operations and enhance agility, creating substantial growth opportunities for vendors in the USA and beyond.

U.S. Market Sees High Demand for Scalable, Secure Cloud Database Solutions

The Cloud Database and DBaaS Market continues to evolve with increasing demand for managed services, driven by the proliferation of data-intensive applications, remote work trends, and the need for zero-downtime infrastructures. As digital transformation accelerates, businesses are choosing DBaaS platforms for seamless deployment, integrated security, and faster time to market.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6586

Key Market Players:

Google LLC (Cloud SQL, BigQuery)

Nutanix (Era, Nutanix Database Service)

Oracle Corporation (Autonomous Database, Exadata Cloud Service)

IBM Corporation (Db2 on Cloud, Cloudant)

SAP SE (HANA Cloud, Data Intelligence)

Amazon Web Services, Inc. (RDS, Aurora)

Alibaba Cloud (ApsaraDB for RDS, ApsaraDB for MongoDB)

MongoDB, Inc. (Atlas, Enterprise Advanced)

Microsoft Corporation (Azure SQL Database, Cosmos DB)

Teradata (VantageCloud, ClearScape Analytics)

Ninox (Cloud Database, App Builder)

DataStax (Astra DB, Enterprise)

EnterpriseDB Corporation (Postgres Cloud Database, BigAnimal)

Rackspace Technology, Inc. (Managed Database Services, Cloud Databases for MySQL)

DigitalOcean, Inc. (Managed Databases, App Platform)

IDEMIA (IDway Cloud Services, Digital Identity Platform)

NEC Corporation (Cloud IaaS, the WISE Data Platform)

Thales Group (CipherTrust Cloud Key Manager, Data Protection on Demand)

Market Analysis

The Cloud Database and DBaaS Market is being shaped by rising enterprise adoption of hybrid and multi-cloud strategies, growing volumes of unstructured data, and the rising need for flexible storage models. The shift toward as-a-service platforms enables organizations to offload infrastructure management while maintaining high availability and disaster recovery capabilities.

Key players in the U.S. are focusing on vertical-specific offerings and tighter integrations with AI/ML tools to remain competitive. In parallel, European markets are adopting DBaaS solutions with a strong emphasis on data residency, GDPR compliance, and open-source compatibility.

Market Trends

Growing adoption of NoSQL and multi-model databases for unstructured data

Integration with AI and analytics platforms for enhanced decision-making

Surge in demand for Kubernetes-native databases and serverless DBaaS

Heightened focus on security, encryption, and data governance

Open-source DBaaS gaining traction for cost control and flexibility

Vendor competition intensifying with new pricing and performance models

Rise in usage across fintech, healthcare, and e-commerce verticals

Market Scope

The Cloud Database and DBaaS Market offers broad utility across organizations seeking flexibility, resilience, and performance in data infrastructure. From real-time applications to large-scale analytics, the scope of adoption is wide and growing.

Simplified provisioning and automated scaling

Cross-region replication and backup

High-availability architecture with minimal downtime

Customizable storage and compute configurations

Built-in compliance with regional data laws

Suitable for startups to large enterprises

Forecast Outlook

The market is poised for strong and sustained growth as enterprises increasingly value agility, automation, and intelligent data management. Continued investment in cloud-native applications and data-intensive use cases like AI, IoT, and real-time analytics will drive broader DBaaS adoption. Both U.S. and European markets are expected to lead in innovation, with enhanced support for multicloud deployments and industry-specific use cases pushing the market forward.

Access Complete Report: https://www.snsinsider.com/reports/cloud-database-and-dbaas-market-6586

Conclusion

The future of enterprise data lies in the cloud, and the Cloud Database and DBaaS Market is at the heart of this transformation. As organizations demand faster, smarter, and more secure ways to manage data, DBaaS is becoming a strategic enabler of digital success. With the convergence of scalability, automation, and compliance, the market promises exciting opportunities for providers and unmatched value for businesses navigating a data-driven world.

Related reports:

U.S.A leads the surge in advanced IoT Integration Market innovations across industries

U.S.A drives secure online authentication across the Certificate Authority Market

U.S.A drives innovation with rapid adoption of graph database technologies

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Mail us: [email protected]

#Cloud Database and DBaaS Market#Cloud Database and DBaaS Market Growth#Cloud Database and DBaaS Market Scope

0 notes

Text

Unlocking Business Potential: The Transformative Power of Data Entry Services

In today’s data-driven world, businesses rely heavily on accurate, organized, and accessible data to make strategic decisions, streamline operations, and stay ahead of the competition. However, managing vast amounts of data can be overwhelming, especially for businesses juggling multiple priorities. This is where professional data entry services come in, offering a seamless solution to transform raw data into a valuable asset.

By outsourcing data entry, businesses can unlock efficiency, reduce errors, and focus on their core goals, paving the way for sustainable growth. This blog delves into the transformative impact of data entry services, their benefits, and why they are a must-have for companies aiming to thrive in a competitive landscape.

What Are Data Entry Services?

Data entry services encompass the process of collecting, inputting, organizing, and managing data in digital systems, such as databases, spreadsheets, or specialized software. These services cover a variety of tasks, such as:

Data Entry: Inputting data from physical documents, forms, or digital sources into structured formats.

Data Cleansing: Correcting errors, removing duplicates, and standardizing records for consistency.

Data Conversion: Transforming data from one format to another, such as from paper records to digital files or PDFs to Excel.

Data Extraction: Retrieving specific data from unstructured sources like websites, reports, or images.

Data Validation: Verifying data accuracy to ensure reliability and usability.

Delivered by skilled professionals or specialized companies, these services leverage advanced tools like optical character recognition (OCR), automation software, and cloud-based platforms to ensure precision, speed, and security. Tailored to meet the unique needs of organizations, data entry services are a cornerstone of efficient data management.
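
To make the cleansing and validation tasks listed above more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the record layout (`name` and `email` fields), the choice of email as the duplicate key, and the simple email pattern are hypothetical, and real providers will use their own rules and tooling.

```python
import re

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def cleanse(records):
    """Standardize fields and drop duplicates (keyed on normalized email)."""
    seen = set()
    cleaned = []
    for record in records:
        # Collapse repeated whitespace and normalize capitalization.
        name = " ".join(record.get("name", "").split()).title()
        email = record.get("email", "").strip().lower()
        if email in seen:
            continue  # duplicate of a record already kept
        seen.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned


def validate(record):
    """Return a list of problems for one record (an empty list means valid)."""
    problems = []
    if not record["name"]:
        problems.append("missing name")
    if not EMAIL_PATTERN.match(record["email"]):
        problems.append("invalid email")
    return problems


# Hypothetical raw input with inconsistent formatting and a duplicate.
raw = [
    {"name": "  alice   SMITH ", "email": "Alice@Example.com "},
    {"name": "Alice Smith", "email": "alice@example.com"},
    {"name": "", "email": "bob@example"},
]

cleaned = cleanse(raw)
report = {record["email"]: validate(record) for record in cleaned}
```

In this sketch the two Alice records collapse into one standardized entry, while the last record is kept but flagged by `validate` so that a human reviewer can correct it rather than having it silently dropped.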

Why Data Entry Services Are Essential

Data is the lifeblood of modern businesses, driving everything from customer insights to operational efficiency. However, handling data manually can lead to errors, inefficiencies, and missed opportunities. Here’s why professional data entry services are essential:

Unmatched Accuracy: Manual data entry is prone to errors like typos, missing entries, or incorrect formatting, which can cause costly mistakes. Professional data entry services combine human expertise with automated tools to deliver error-free results, ensuring your data is reliable and actionable.

Time and Resource Savings: Data entry is a time-consuming task that can divert focus from strategic priorities. By outsourcing, businesses free up their teams to concentrate on high-value activities like innovation, marketing, or customer engagement, boosting overall productivity.

Cost Efficiency: Hiring in-house staff or investing in advanced data management tools can strain budgets, especially for small and medium-sized businesses. Outsourcing data entry eliminates these costs, offering a cost-effective solution without compromising quality.

Enhanced Data Security: With cyber threats on the rise, protecting sensitive information is essential. Reputable data entry providers implement robust security measures, including encryption, secure servers, and strict access controls, to safeguard your data.

Scalability for Growth: Businesses often face fluctuating data needs, such as during product launches or seasonal peaks. Professional data entry services offer the flexibility to scale operations up or down, ensuring efficiency without unnecessary costs.

Industries Benefiting from Data Entry Services

Data entry services are versatile, delivering value across a wide range of industries:

E-commerce: Streamlining product catalogs, pricing updates, and order processing for seamless online operations.

Healthcare: Digitizing patient records, managing billing, and ensuring compliance with regulatory standards.

Finance: Handling invoices, transaction records, and financial reports with precision.

Logistics: Tracking shipments, managing inventory, and optimizing supply chain data.

Retail: Maintaining customer databases, loyalty programs, and sales analytics for personalized experiences.

Real Estate: Organizing property listings, contracts, and client data for efficient transactions.

By addressing industry-specific challenges, data entry services empower businesses to operate more effectively and stay competitive.

Visualizing the Transformation

Picture a business buried under piles of paper documents, struggling with inconsistent data and frustrated employees. Now, imagine a streamlined digital ecosystem where data is organized, accurate, and instantly accessible. This is the transformative power of professional data entry services.

By converting chaotic data into structured insights, these services enable businesses to:

Make informed decisions based on reliable data.

Enhance customer satisfaction with accurate and timely information.

Streamline workflows by eliminating manual errors and bottlenecks.

Maintain compliance with industry regulations through secure data handling.

The result is a clear, organized, and efficient operation that drives growth and success.

How to Choose the Right Data Entry Partner

Selecting the right data entry service provider is key to maximizing benefits. Consider these factors when making your choice:

Industry Experience: Choose a provider with a proven track record in your sector for relevant expertise.

Quality Control: Ensure the provider has robust processes for error-checking and data validation.

Security Measures: Verify compliance with data protection standards, including encryption and secure access.

Scalability: Opt for a provider that can adapt to your changing needs, from small projects to large-scale operations.

Transparent Pricing: Look for cost-effective solutions with clear pricing and no hidden fees.

Customer Support: Select a provider with responsive support to address concerns promptly.

Conclusion

Professional data entry services are more than just a support function; they are a strategic tool for unlocking business potential. By ensuring accuracy, saving time, and enhancing security, these services empower businesses to focus on innovation and growth. From small startups to large enterprises, outsourcing data entry provides the flexibility, expertise, and efficiency needed to thrive in a competitive landscape.

If you’re ready to transform your data management and streamline your operations, partnering with a professional data entry provider is the way forward. Embrace the power of organized data and watch your business soar to new heights in today’s data-driven world.

0 notes